AI can learn real-world skills by playing video games

StarCraft and Minecraft are good testing grounds for making artificial intelligence more humanlike

Artificial intelligence systems that play video games can pick up all kinds of real-world skills.

ryccio/Getty Images Plus; adapted by L. Steenblik Hwang

Dario Wünsch was feeling confident. The professional gamer was about to become the first to take on the artificial intelligence program AlphaStar in the video game StarCraft II. In this game, players command fleets of alien ships vying for territory. Wünsch, a 28-year-old from Germany, had been playing StarCraft II for nearly 10 years. No way could he lose this five-match challenge to a new AI gamer.

Even AlphaStar’s creators at the London-based company DeepMind weren’t hopeful about the outcome. Many researchers had tried to build an AI program that could handle this complex game. So far, none had created a system that could beat seasoned human players.

AlphaStar faced off against Wünsch in December 2018. At the onset of match one, the AI appeared to make a fatal mistake. It didn’t build a barrier at the entrance to its camp. This allowed Wünsch to invade the camp and destroy several of the AI’s worker units.

For a minute, it looked like StarCraft II would remain one realm where humans best machines. Then AlphaStar made a comeback. It built a strike team that quickly wiped out Wünsch’s defenses. AlphaStar 1, Wünsch 0.

Wünsch shook off the loss. He just needed to focus more on defense. But in the second round, AlphaStar surprised the pro gamer. The AI did not attack until it had built an army. That army once again crushed Wünsch’s forces. Three matches later, AlphaStar had won the competition 5-0. Wünsch joined the small but growing club of world-class gamers bested by a machine.

Building AI gamers that can beat human players is more than a fun project. The goal is to use those programs to tackle real-world challenges, says Sebastian Risi. He is an AI researcher at IT University of Copenhagen in Denmark.

For instance, AIs built to play the online game Dota 2 taught a robotic hand how to handle objects. Researchers at OpenAI, a company based in San Francisco, Calif., reported the findings in January. AlphaStar’s creators think their AI could help scientists with other complex tasks, such as simulating climate change or understanding conversation.

Still, AIs struggle with two important things. The first is working with each other. The second is constantly applying new knowledge to new situations.

The StarCraft games have proved to be great testing grounds for ways to help AIs work together. To find ways to make AIs lifelong learners, researchers are turning to another popular video game: Minecraft. Some people may use screen time as a distraction from real life. But virtual challenges may help AI pick up the skills needed for real-world success.

Team play

When AlphaStar took on Wünsch, the AI played StarCraft II like a human would. It had complete control over all the characters in its fleet. But there are many real-world situations where relying on one AI to manage lots of devices would become unwieldy, says Jakob Foerster. He’s an AI researcher at Facebook AI Research in San Francisco.

Think of overseeing dozens of nursing robots caring for patients at a hospital. Or self-driving trucks coordinating their speeds across miles of highway to ease traffic jams. Foerster and other researchers are now using the StarCraft games to try out different “multiagent” strategies.

In some designs, individual combat units have some independence. But those units still answer to a single AI. In this setup, the AI acts like a coach shouting plays from the sidelines. The coach creates a big-picture plan and then issues its instructions to team members. Individual combat units use that guidance, along with observations of their immediate surroundings, to choose how to act.
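Here is a minimal Python sketch of that coach-style division of labor. The function names, the “attack” and “regroup” plans and the health cutoff are all hypothetical, and the rules are written by hand for illustration; real AI teams learn this behavior rather than following fixed rules. What the sketch shows is the structure: one function makes the big-picture call, and each unit combines that call with what it sees nearby.

```python
from dataclasses import dataclass

@dataclass
class Observation:
    enemies_nearby: int   # enemies this unit can see
    health: float         # 0.0 (destroyed) to 1.0 (undamaged)

def commander_plan(total_allies, total_enemies):
    """The 'coach': one big-picture call for the whole team (hypothetical rule)."""
    return "attack" if total_allies > total_enemies else "regroup"

def unit_action(plan, obs):
    """Each unit blends the coach's plan with what it sees around itself."""
    if obs.health < 0.3:
        return "retreat"                 # local judgment overrides the plan
    if plan == "attack":
        return "fire" if obs.enemies_nearby > 0 else "advance"
    return "hold"

plan = commander_plan(total_allies=12, total_enemies=8)
units = [Observation(2, 0.9), Observation(0, 0.8), Observation(3, 0.1)]
print(plan, [unit_action(plan, obs) for obs in units])  # attack ['fire', 'advance', 'retreat']
```

Every unit hears the same plan, but the three units end up doing three different things because their surroundings differ.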

Scientists in China tested the effectiveness of this strategy. They trained their AI team in StarCraft using reinforcement learning, a technique that lets computers learn from trial and error.

In reinforcement learning, AIs pick up skills by interacting with their environment, which in this case is a StarCraft game. They get virtual rewards after doing something right.
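To make the reward idea concrete, here is a toy example of one common reinforcement learning method, tabular Q-learning. It is not StarCraft and not necessarily the method the Chinese team used; it is a hypothetical five-cell hallway where the only reward waits at the far end. By trial and error, the agent learns which moves eventually pay off.

```python
import random

N_STATES = 5          # hallway cells 0..4; reaching cell 4 earns the reward
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right

def step(state, action):
    """Move within the hallway; return (next_state, reward, episode_over)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action index]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:
                a = rng.randrange(2)                       # explore
            else:
                best = max(q[state])                       # exploit, breaking ties randomly
                a = rng.choice([i for i in range(2) if q[state][i] == best])
            nxt, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward reward + discounted value of the next cell.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q

q = train()
```

After training, stepping right (`q[s][1]`) scores higher than stepping left (`q[s][0]`) in every cell before the goal, so an agent that always picks its highest-scoring move walks straight to the reward. Nobody told it to go right; the rewards did.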

In the game, each AI teammate earns rewards based on the number of enemies it kills. Teammates also earn rewards if their entire team defeats game-controlled fleets.
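The two kinds of rewards could be combined roughly like this. The point values are made up for illustration; they are not the values the researchers used.

```python
def teammate_reward(kills, team_won, points_per_kill=1.0, win_bonus=10.0):
    """Individual credit for enemies destroyed, plus a bonus every teammate gets on a win."""
    reward = points_per_kill * kills
    if team_won:
        reward += win_bonus
    return reward

kills_by_unit = [3, 0, 1]
rewards = [teammate_reward(k, team_won=True) for k in kills_by_unit]
print(rewards)  # [13.0, 10.0, 11.0]
```

The shared win bonus pushes every unit to help the team, while the per-kill reward tells each unit how much it contributed on its own.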

On challenges with teams of at least 10 combat units, the coach-guided AI teams won 60 to 82 percent of the time. Teams whose units had no independence from their AI commander were less successful.

AI crews guided by a single commander may work best when the group can rely on fast, accurate communication among all agents. For instance, this system might work well for robots operating within the same warehouse.

Come together

For machines spread across vast distances, however, such as self-driving cars, it’s every AI for itself. Separate devices won’t have reliable and fast data connections to a single master AI, Foerster says.

AIs working under those limits generally can’t cooperate as well as centralized teams can. But Foerster’s group devised a way to train self-reliant machines to work together.

In this system, an AI commander offers feedback to AI teammates during reinforcement learning. After each trial run, the commander simulates alternative possible futures. It basically tells each team member, “This is what would have happened if everyone else had done the same thing, but you did something different.” This method helps each AI team member judge which actions help or hinder the group’s success.
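That “what would have happened” feedback boils down to a small calculation. This sketch follows the general idea of a counterfactual baseline: compare the team’s payoff for what actually happened with the average payoff the team would have gotten if just one member had swapped its action while everyone else stayed the same. The payoff table and probabilities are invented for the example; in real training these numbers would come from a learned estimate, not a fixed table.

```python
def counterfactual_advantage(q, joint_action, agent, policy):
    """
    q:            dict mapping a joint action (tuple) to the team's estimated payoff
    joint_action: the actions the team actually took
    agent:        index of the team member being judged
    policy:       that member's probabilities over its own actions
    """
    actual = q[joint_action]
    baseline = 0.0
    for alt_action, prob in enumerate(policy):
        # Keep everyone else's action fixed; swap in an alternative for `agent`.
        alt = list(joint_action)
        alt[agent] = alt_action
        baseline += prob * q[tuple(alt)]
    return actual - baseline   # > 0: this member's choice helped the team

# Two units; action 0 = hold, 1 = attack. The team wins only if both attack.
q = {(0, 0): 0.0, (0, 1): 0.0, (1, 0): 0.0, (1, 1): 1.0}
print(counterfactual_advantage(q, (1, 1), 0, [0.5, 0.5]))  # 0.5
print(counterfactual_advantage(q, (0, 1), 0, [0.5, 0.5]))  # -0.5
```

A unit that attacked alongside its partner gets credit, while a unit that held back while its partner attacked gets blamed, even though both situations involve the same team.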

Then, once these teams are fully trained, they are on their own. The master AI is less like a coach on the sidelines and more like a dance instructor. It offers pointers during rehearsals but stays silent during the onstage performance.
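The dance-instructor arrangement can be sketched as a team member whose policy only ever looks at its own local observations. During training, a central critic that sees the whole battlefield supplies a score (an “advantage”) for each action; during the performance, `act` runs with no commander at all. The class name, the softmax preference table and the learning rate are assumptions for illustration, not Foerster’s group’s actual code.

```python
import math
import random

class LocalActor:
    """One team member's policy: softmax preferences keyed by LOCAL observations only."""

    def __init__(self, actions, seed=0):
        self.actions = list(actions)
        self.prefs = {}                      # local_obs -> one preference per action
        self.rng = random.Random(seed)

    def _probs(self, local_obs):
        prefs = self.prefs.setdefault(local_obs, [0.0] * len(self.actions))
        exps = [math.exp(p) for p in prefs]
        total = sum(exps)
        return [e / total for e in exps]

    def act(self, local_obs):
        # Performance: no commander, no global state, just what this unit sees.
        return self.rng.choices(self.actions, weights=self._probs(local_obs))[0]

    def learn(self, local_obs, action, advantage, lr=0.5):
        # Rehearsal: `advantage` comes from a central critic that saw everything;
        # the actor itself never does. This is a softmax policy-gradient step.
        probs = self._probs(local_obs)
        chosen = self.actions.index(action)
        for i in range(len(self.actions)):
            grad = (1.0 if i == chosen else 0.0) - probs[i]
            self.prefs[local_obs][i] += lr * advantage * grad

actor = LocalActor(["hold", "fire"])
for _ in range(50):
    actor.learn(("enemy in range",), "fire", advantage=1.0)  # critic: firing helped
print(actor.act(("enemy in range",)))
```

Keeping the critic out of `act` is the whole design choice: the extra information helps during training, but the finished unit never depends on a connection it might not have.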

To test the method, Foerster’s group trained three AI teams in StarCraft. Once trained, team members had to act based only on observations of their own surroundings.

In combat rounds against a game-controlled opponent, all three AI groups won most of their rounds. The independent AIs performed about as well as three AI teams controlled by a master AI in the same combat scenarios. Foerster’s group presented its findings in February 2018 at a conference on artificial intelligence.

The building blocks of learning

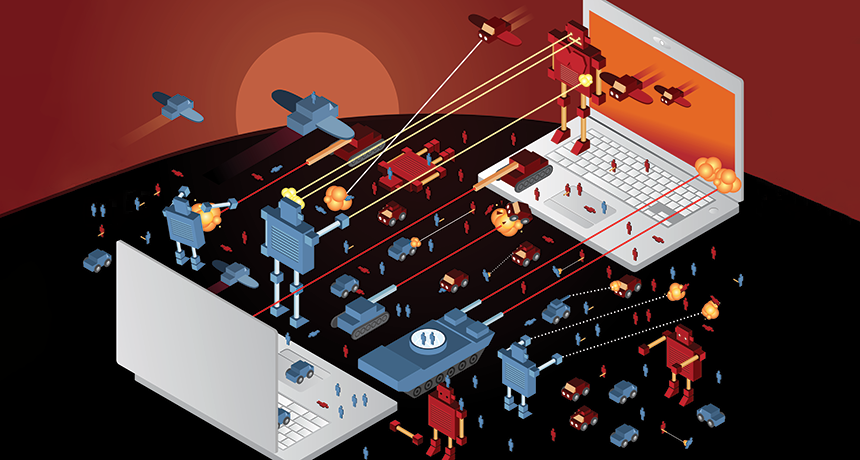

The types of AI training tested in StarCraft and StarCraft II are aimed at helping a team of AIs master a single task. That task might be coordinating traffic lights or a fleet of uncrewed aircraft.

The StarCraft games are great for that because the goal is fairly basic: Each player has to overpower an opponent. But if AI is going to become more humanlike, it will need to be able to learn more and keep picking up new skills.

Most AI gamers are trained to only do one task well, says Risi, the AI researcher in Denmark. “Then they’re fixed so they can’t change,” he says. Training AI gamers to do a different task in the StarCraft games would require going back to square one. A better place for testing ways to make AI adaptable is the Lego-like realm of Minecraft.

This virtual world is made up of 3-D blocks of dirt, glass and other materials. Unlike StarCraft, Minecraft poses no single task for players. Instead, players gather resources to build structures, travel, hunt for food and do pretty much whatever else they please.

Caiming Xiong is an AI researcher at Salesforce, a software company based in San Francisco, Calif. His team used a simple Minecraft building full of blocks to test an AI that had been designed to learn continually.

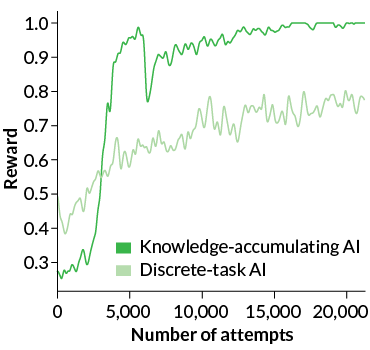

The researchers guided the AI through reinforcement learning challenges that became ever more complex. The AI had been designed to break each challenge into simpler steps. It could then tackle each step using skills it already had, or it could try something new. This AI completed challenges more quickly than another AI that was not designed to use prior knowledge.
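Breaking a challenge into steps and reusing old skills can be sketched like this. The task names, the step lists and the `learn_new_skill` placeholder are hypothetical; in the real system the breakdown and the skills come from training, while here they are written by hand.

```python
def solve(task, decompositions, skills, learn_new_skill):
    """Break `task` into steps, reuse known skills, and learn only what is missing."""
    plan = []
    for step in decompositions.get(task, [task]):   # no known breakdown: one big step
        if step in skills:
            plan.append(("reuse", step))
        else:
            skills[step] = learn_new_skill(step)     # the expensive part: new training
            plan.append(("learn", step))
    return plan

# Hypothetical example: two skills are already known.
skills = {"find item": "find policy", "collect item": "collect policy"}
decompositions = {"stack block": ["find item", "collect item", "place item"]}
plan = solve("stack block", decompositions, skills, lambda step: f"new policy for {step}")
print(plan)  # [('reuse', 'find item'), ('reuse', 'collect item'), ('learn', 'place item')]
```

Only one of the three steps needs fresh training, which is the intuition behind why reusing prior knowledge speeds things up.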

The knowledge-gathering AI was also better at adjusting to new situations. Xiong’s team taught both AIs how to pick up blocks. While training in a simple room that contained only one block, both AIs got the “collect item” skill down pat. But in a room with multiple blocks, the AI trained to do only one task struggled to identify its target. It grabbed the right block only 29 percent of the time.

The knowledge-gathering AI knew to locate a target object by relying on a “find item” skill it had already learned. It picked up the right block 94 percent of the time. The research was presented in Vancouver, Canada, at a scientific conference in May 2018.
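The gap between the two AIs in the multi-block room can be imitated with a toy simulation. Both agents below are hand-written stand-ins, not trained AIs: one grabs a block at random, and the other runs a “find item” step before “collect item.” The room layout and colors are invented, so the toy’s success rates say nothing about the 29 and 94 percent measured in the study.

```python
import random

def collect_only(room, target, rng):
    """Single-skill stand-in: grabs whichever block it reaches, ignoring the target."""
    return rng.choice(room)

def find_then_collect(room, target, rng):
    """Composed stand-in: run 'find item' first, then 'collect item' on the match."""
    matches = [block for block in room if block == target]   # 'find item'
    return matches[0] if matches else rng.choice(room)       # 'collect item'

def success_rate(agent, trials=1000, seed=0):
    rng = random.Random(seed)
    colors = ["red", "blue", "green", "yellow"]
    hits = 0
    for _ in range(trials):
        room = rng.sample(colors, k=len(colors))   # one block of each color, shuffled
        target = rng.choice(colors)
        hits += agent(room, target, rng) == target
    return hits / trials

print(success_rate(collect_only), success_rate(find_then_collect))
```

With four blocks, the random grabber succeeds about a quarter of the time, while the composed agent always succeeds, because finding the target first removes the guesswork.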

With further training, the team’s system could master more skills. But in this system, the AI can only learn tasks that researchers tell it to do during training. Humans don’t have this kind of learning cutoff. When people finish school, “it’s not like, ‘Now you’re done learning. You can freeze your brain and go,’” Risi explains.

Lifelong learning

A better AI would get basic skills in games and simulations and then continue learning throughout its lifetime, says roboticist Priyam Parashar. She works at the University of California, San Diego.

A household robot, for example, should be able to find its way around new obstacles like baby gates or rearranged furniture. Her team created an AI that can identify, without human input, when it needs further training. Each time the AI runs into a new obstacle, it takes stock of how its surroundings differ from what it expected. Then it devises various workarounds and calculates the outcome of each. Finally, it chooses the best solution.
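Here is a rough sketch of that loop: notice what differs from expectations, score several workarounds, and pick the best. The `simulate` function stands in for the AI’s own outcome predictions, and the workaround names and scores are hypothetical, chosen to echo the glass-barrier test in this article.

```python
def detect_surprise(expected, observed):
    """Return every feature of the surroundings that differs from what was expected."""
    return {key: observed.get(key)
            for key in expected.keys() | observed.keys()
            if expected.get(key) != observed.get(key)}

def choose_workaround(surprise, candidates, simulate):
    """Predict the outcome of each candidate fix and keep the highest-scoring one."""
    if not surprise:
        return None                      # nothing unexpected: keep the original plan
    outcomes = {name: simulate(name, surprise) for name in candidates}
    return max(outcomes, key=outcomes.get)

# Hypothetical predicted payoffs for reaching the gold block.
predicted = {"wait": 0.0, "look for another route": 0.2, "break glass": 0.9}
surprise = detect_surprise({"doorway": "open"}, {"doorway": "glass"})
best = choose_workaround(surprise, predicted, lambda name, s: predicted[name])
print(surprise, best)
```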

Parashar’s team tested this AI in a two-room Minecraft building. The AI had been trained to get a gold block from the second room. But another Minecraft player had blocked the doorway with a glass barrier. At first, this stopped the AI from getting the gold block. The AI then assessed the situation. It used reinforcement learning to figure out how to shatter the glass and get the gold.

The researchers reported their findings in 2018 in The Knowledge Engineering Review.

Parashar admits that an AI faced with a surprise baby gate or glass wall should not conclude that the best option is to bust it down. But programmers can set limits on an AI’s decision-making, she says. For instance, researchers can tell an AI that valuable or owned objects should not be broken.
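One simple way to set such a limit is to filter out forbidden actions before picking the best one. The action strings, scores and protected-object list are hypothetical; the sketch only shows where a rule like “don’t break valuable or owned objects” could plug into the decision.

```python
def allowed(action, protected_objects):
    """Reject any action that would break something marked valuable or owned."""
    verb, _, target = action.partition(" ")
    return not (verb == "break" and target in protected_objects)

def choose_safely(scores, protected_objects):
    """Pick the best-scoring action that passes the rule, or ask for help if none do."""
    safe = {a: s for a, s in scores.items() if allowed(a, protected_objects)}
    return max(safe, key=safe.get) if safe else "ask for help"

scores = {"wait": 0.0, "look for another route": 0.2, "break glass": 0.9}
print(choose_safely(scores, protected_objects={"glass"}))  # look for another route
print(choose_safely(scores, protected_objects=set()))      # break glass
```

The rule doesn’t change how the AI scores its options; it just takes some options off the table.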

New video games are becoming AI test-beds all the time. One such game is Overcooked. This team-cooking game takes place in a tight, crowded kitchen. There, players are constantly getting in each other’s way. AI and games researcher Julian Togelius hopes to use the game to test collaborating AIs. “Games are designed to challenge the human mind,” says Togelius, of New York University in New York City.

Any video game is a test of how well AI can mimic human cleverness. But when it comes to testing AI in video games or other simulated worlds, Parashar notes, “you cannot ever say, ‘OK, I’ve modeled everything that’s going to happen in the real world.’” Bridging the gap between virtual and physical reality will take more research.

One way to keep game-trained AIs from going too far, she says, is to have them ask humans for help when it’s needed. “Which, in a sense, is making [AI] more like humans, right?” she says. “We get by with the help of our friends.”
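Asking for help can be built into the decision itself: if the AI’s confidence in its best option falls below a cutoff, it hands the choice to a person. The threshold and confidence values below are hypothetical.

```python
def act_or_ask(confidence, threshold=0.6):
    """Take the most confident option, or ask a human if no option is confident enough."""
    best = max(confidence, key=confidence.get)
    return best if confidence[best] >= threshold else "ask a human"

print(act_or_ask({"look for another route": 0.85, "wait": 0.15}))                      # look for another route
print(act_or_ask({"look for another route": 0.4, "break glass": 0.35, "wait": 0.25}))  # ask a human
```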