A sense of touch could upgrade virtual reality, prosthetics and more

Researchers also hope to bring ‘touch’ to such things as online shopping and doctor visits

Researchers are trying to make it possible to sense textures on a computer screen and with artificial limbs.

Hand: Image Source/ Getty Images Plus; Sweaters: CerebroCreative/iStock/Getty Images Plus; Phone: Issarawat Tattong/Getty Images Plus; T. Tibbitts

On most mornings, Jeremy D. Brown eats an avocado. But first, he gives it a little squeeze. A ripe avocado will yield to that pressure, but not too much. Brown also weighs the fruit in his hand. He feels the waxy skin’s bumps and ridges.

“I can’t imagine not having the sense of touch to be able to do something as simple as judging the ripeness of that avocado,” says Brown. He’s a mechanical engineer at Johns Hopkins University. That’s in Baltimore, Md. Brown studies haptic feedback. That’s information conveyed through touch.

Many of us have thought about touch more than usual during the COVID-19 pandemic. Hugs and high fives have been rare. More online shopping has meant fewer chances to touch things before buying. People have missed out on trips to the beach where they might have sifted sand through their fingers. A lot goes into each of those sensory acts.

Our sense of touch is very complex. Every sensation arises from thousands of nerve fibers and millions of brain cells, explains Sliman Bensmaia. He’s a neuroscientist at the University of Chicago in Illinois. Nerve receptors detect cues about pressure, shape, motion, texture, temperature and more. Those cues activate nerve cells, or neurons. The central nervous system interprets those patterns of activity. It tells you if something is smooth or rough, wet or dry, moving or still.

Neuroscience is at the heart of research on touch. But Brown and other engineers study touch, too. So do experts in math and materials science. They want to translate the science of touch into helpful applications. Their work may lead to new technologies that mimic tactile sensations.

Some scientists are learning more about how our nervous system responds to touch. Others are studying how our skin interacts with different materials. Still others want to know how to produce and send simulated touch sensations.

All these efforts present challenges. But progress is underway. And the potential impacts are broad. Virtual reality may get more realistic. Online shoppers might someday “touch” products before buying them. Doctors could give physical exams online. And people who have lost limbs might regain some sensation through prostheses.

Good vibrations

Virtual reality is already pretty immersive. Users can wander through the International Space Station. Or, they can visit Antarctica. These artificial worlds often have realistic sights and sounds. What they lack is realistic touch. That would require reproducing the signals that trigger haptic — or touch — sensations.

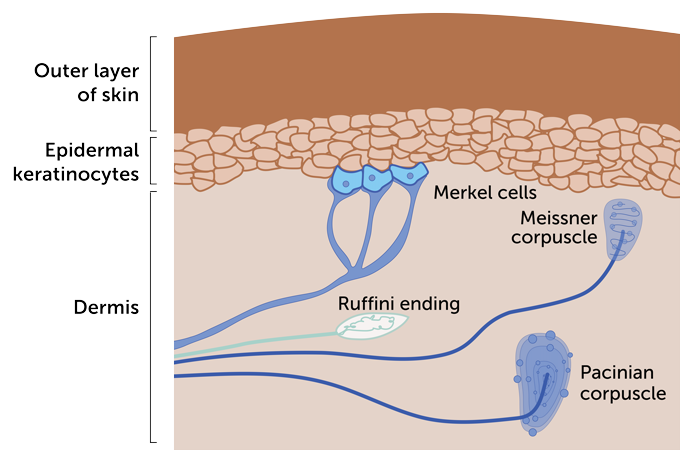

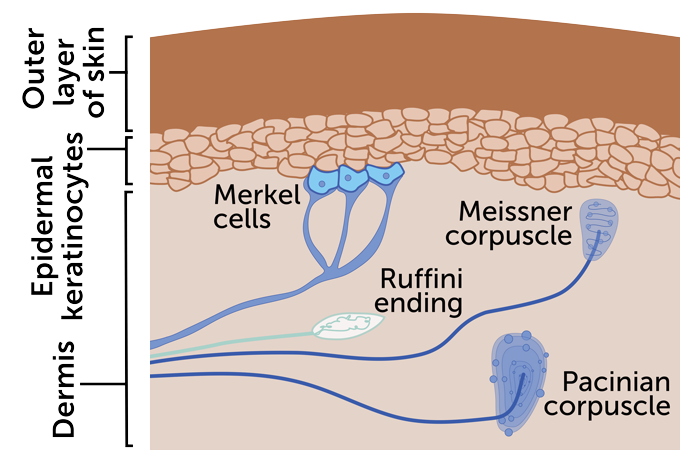

Our bodies are covered in nerve endings that respond to touch. Some receptors track where our body parts are. Others sense pain and temperature. One goal for researchers is to mimic sensations that arise from force and movement. Those include pressure, sliding and rubbing.

A few different types of receptors respond to force and movement. One type, the Pacinian corpuscle, lies deep within the skin. These receptors are especially good at picking up vibrations caused by touching different textures. When stimulated, they send signals to the brain. The brain interprets those signals as a texture. Bensmaia compares this to hearing a series of notes and recognizing a tune.

Deep feelings

Four main types of touch receptors respond to a mechanical stimulation of the skin. They are known as Meissner corpuscles, Merkel cells, Ruffini endings and Pacinian corpuscles. Some respond better than others to certain types of stimuli. Recent studies have focused on the deep-skin Pacinian corpuscles. Those receptors respond to vibrations created as fingers rub against textured materials.

“Corduroy will produce one set of vibrations,” Bensmaia says. Types of silk produce other sets. Scientists can measure those sets of vibrations. That work is a first step toward reproducing the feel of different textures.

But creating the right vibration pattern is not enough. Any stimulation meant to mimic a texture must be strong enough to trigger the skin’s touch receptors. And researchers are still figuring out how strong is strong enough.

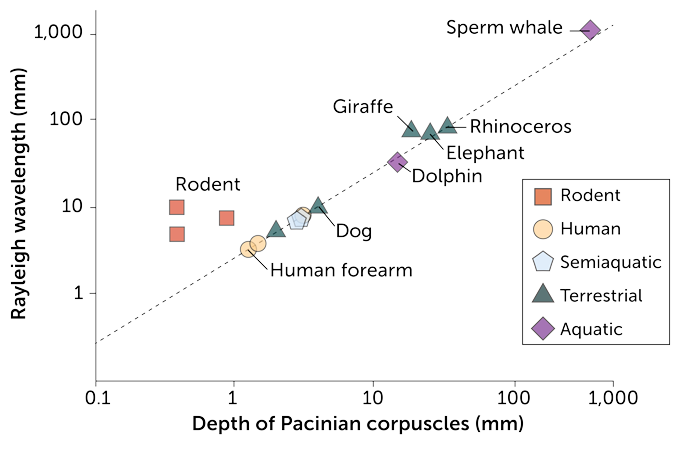

For instance, vibrations caused by textures create different types of wave energy. One team found that rolling-type waves called Rayleigh (RAY-lee) waves go deep enough to reach Pacinian receptors. (Much larger versions of those waves ripple through Earth during earthquakes.) The team shared this finding last October in Science Advances.

Universality of touch

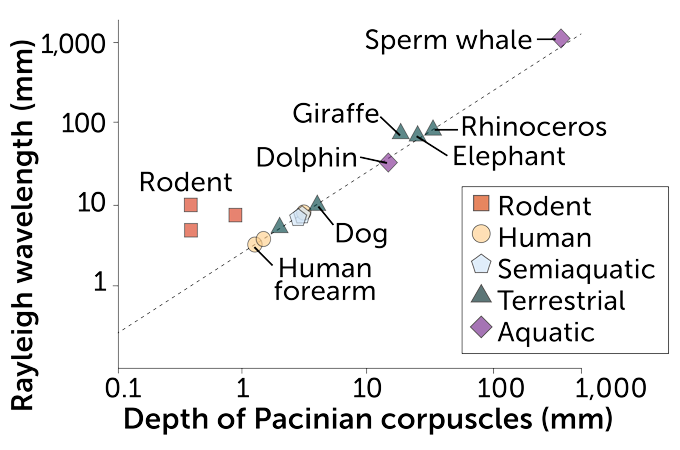

This graph depicts a “universal scaling law.” It shows how long the wavelength of Rayleigh waves needs to be to trigger Pacinian touch receptors in a mammal’s skin. The thicker a species’ skin, the longer those waves must be for certain “touches” to be felt. The relatively shallow receptors in human skin, for instance, respond to shorter waves than the deeper ones in sperm-whale skin. This trend holds true for many mammals, but not for very small ones, such as mice.

Ratio of Rayleigh wavelength to touch receptor depth in various mammals

The size of Rayleigh waves also matters. For the most part, those waves must be at least 2.5 times as long as the depth of those Pacinian receptors in the skin. That’s enough for a person — and most other mammals — to feel a sense of touch through those receptors, explains James Andrews. He’s a mathematician at the University of Birmingham in England. His team discovered this rule by looking at studies that involved animals — including dogs, dolphins and rhinos.
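The 2.5-times rule is simple enough to write as a short calculation. The Python sketch below is only an illustration of the arithmetic, not the team's own method. The function names and the 2-millimeter receptor depth are made up for the example. They are not measurements from the study.

```python
# Rule of thumb from the article: a Rayleigh wave must be at least about
# 2.5 times as long as the Pacinian receptors are deep in the skin.
MIN_WAVELENGTH_TO_DEPTH_RATIO = 2.5


def min_rayleigh_wavelength(receptor_depth_mm: float) -> float:
    """Return the shortest wavelength (in mm) that should reach the receptors."""
    if receptor_depth_mm <= 0:
        raise ValueError("receptor depth must be positive")
    return MIN_WAVELENGTH_TO_DEPTH_RATIO * receptor_depth_mm


def can_trigger_receptors(wavelength_mm: float, receptor_depth_mm: float) -> bool:
    """Check whether a wave is long enough under the 2.5-times rule."""
    return wavelength_mm >= min_rayleigh_wavelength(receptor_depth_mm)


# Hypothetical depth chosen only to show the arithmetic (not a measured value).
example_depth_mm = 2.0
print(min_rayleigh_wavelength(example_depth_mm))
print(can_trigger_receptors(4.0, example_depth_mm))
```

With a made-up depth of 2 millimeters, the shortest useful wave would be 5 millimeters long, so a 4-millimeter wave would fall short. Deeper receptors raise that minimum in proportion, which matches the trend across mammals with thicker skin.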

This work helps reveal what it takes to realistically capture touch, Andrews says. New devices could use such information to convey touch sensations to users. Some might do this using ultrasonic waves or other techniques. And that might someday lead to virtual hugs and other tactile experiences in virtual reality.

Online tactile shopping

Cynthia Hipwell moved into a new house before the pandemic. She looked at some couches online but she couldn’t bring herself to buy one from a website. “I didn’t want to choose couch fabric without feeling it,” says Hipwell. A mechanical engineer, she works at Texas A&M University in College Station.

She imagines that one day, “if you’re shopping on Amazon, you could feel fabric.” We’re not there yet. But touch screens that mimic different textures are being developed. What may make them possible is harnessing shifts in electrical charge or vibrations. Touching the screen would then tell you whether a sweater is soft or scratchy. Or if a couch’s fabric feels bumpy or smooth.

To make that happen, researchers need to know what affects how a screen feels. Surface features that are nanometers (billionths of a meter) high affect a screen’s texture. Tiny differences in moisture also change a screen’s feel. That’s because moisture alters the friction between your fingers and the glass. Even shifts in electrical charge play a role. These shifts change the attraction between finger and screen. Such attraction is called electroadhesion (Ee-LEK-troh-ad-HEE-shun).

Hipwell’s group built a computer model that accounts for those effects. It also accounts for how someone’s skin squishes when pressed against glass. The team shared its work in the March 2020 IEEE Transactions on Haptics.

Hipwell hopes this computer program can help product designers make screens that can provide a sense of what a displayed object might feel like. Those screens could be used for more than online shopping. A car’s dashboard might have sections that change texture for each menu, Hipwell says. A driver might change temperature settings or radio stations by touch while keeping her eyes on the road.

Wireless touch patches

Virtual doctor visits rose sharply during the early days of the COVID-19 pandemic. But online appointments have limitations. Video doesn’t let doctors feel for swollen glands or press an abdomen to check for lumps. Remote medicine with a sense of touch might help at times when the doctor and patient can’t meet up in person. And it could be useful for people who live in areas far from doctors.

People in those places might someday get touch-sensing equipment at home. So might a pharmacy clinic, workplace — even the International Space Station. A robot, glove or other tool with sensors might then touch a patient’s body. The information it collected could then be relayed to a device somewhere else. A doctor at that distant location could then feel like they’re touching the patient.

The holdup right now is crafting the devices needed to translate data on touch into sensations. One option is a flexible patch that attaches to the skin. Its upper layers hold a stretchy circuit board and tiny vibrating actuators. Wireless signals, created when someone touches a screen or device elsewhere, control the device. Energy to run the patch can be delivered wirelessly, notes John Rogers. He’s a physical chemist leading the device’s development at Northwestern University in Evanston, Ill. The group reported its initial progress two years ago in Nature.

Rogers’ team has since made its patch thinner and lighter. It also gives the wearer more detailed touch information. Plus, the latest version comes in custom sizes and shapes. Up to six patches can work at the same time on different parts of the body.

The researchers wanted to make the patch work with common electronics. For that, the team created a program to send sensations from a touch screen to the patch. As one person moves her fingers across a smartphone or touch-screen computer, another person wearing the patch can feel that touch. So a child might feel a mom stroking his back. Or a patient might feel a doctor poking to see where their skin feels tender.

Pressure points

Rogers’ team believes its patch could upgrade artificial limbs, too. The patch can pick up signals from pressure on a prosthetic arm’s fingertips. Those signals can then be sent to a patch worn by the person with the artificial limb.

Other researchers also are testing ways to add tactile feedback to artificial body parts. This could make the devices more user-friendly. For example, adding pressure and motion feedback helped people with an artificial leg walk with more confidence. The 2019 device also reduced the pain from phantom limbs.

Brown, the Johns Hopkins engineer, hopes to help people control the force of their artificial limbs. Nondisabled people adjust their hands’ force on instinct. For example, Brown takes his young daughter’s hand in a parking lot. If she starts to pull away, he gently squeezes. But he might hurt her if he couldn’t sense the stiffness of her hand.

Brown’s group tested two ways to give users feedback on the force exerted by their electronic limbs. In one, a device squeezed the user’s elbow. The other used a vibrating device strapped on near the wrist. The stiffer the object touched by the artificial limb, the stronger the squeeze or the vibration. Volunteers who had not lost limbs tried each setup. The test involved judging the stiffness of blocks with an artificial lower arm and hand.

Both types of feedback worked better than no feedback. But neither type seemed better than the other. “We think that is because, in the end, what the human user is doing is creating a map,” Brown says. Basically, people’s brains match up how much force corresponds to the intensity of each type of feedback. Brown and his colleagues shared this finding two years ago in the Journal of NeuroEngineering and Rehabilitation.
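The kind of map Brown describes can be pictured with a few lines of Python. This is a rough sketch under stated assumptions, not the code from the study. The function name, the 0-to-1 intensity scale and the straight-line relationship are all assumptions made for illustration. The only thing taken from the research is that stiffer objects produce stronger feedback.

```python
def feedback_intensity(stiffness: float,
                       min_stiffness: float = 0.0,
                       max_stiffness: float = 1.0) -> float:
    """Map an object's stiffness to a feedback intensity between 0 and 1.

    Assumption for illustration: intensity rises in a straight line with
    stiffness and is capped at the ends of the range. The same number could
    drive either a squeezing device or a vibrating one.
    """
    if max_stiffness <= min_stiffness:
        raise ValueError("max_stiffness must be greater than min_stiffness")
    fraction = (stiffness - min_stiffness) / (max_stiffness - min_stiffness)
    # Keep the result inside the 0-to-1 range the device can deliver.
    return min(1.0, max(0.0, fraction))


# Hypothetical stiffness values on an arbitrary 0-to-10 scale.
for block_stiffness in (2.0, 5.0, 12.0):
    print(block_stiffness, feedback_intensity(block_stiffness, 0.0, 10.0))
```

Because the output is the same number whichever device delivers it, the sketch also hints at why squeezing and vibrating could work about equally well. The user only has to learn which intensity goes with which stiffness.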

But the brain may not be able to correctly map all types of touch feedback from artificial body parts. One team in Sweden built bionic hands with touch sensors on the thumb. They sent signals to an electrode implanted around the user’s ulnar nerve. That’s in the arm. So, the feedback went directly into the nervous system.

Three people who had lost a hand tested these bionic hands. Users did feel a touch when the thumb was prodded. But that touch felt as if it came from somewhere else on the hand. The mismatch did not improve even after more than a year of use. Bensmaia was part of a team that shared the finding last December in Cell Reports.

That mismatch may have arisen because the team couldn’t match the touch signal to the right part of the nerve. Many bundles of fibers make up each nerve. Different bundles in the ulnar nerve carry signals to and from different parts of the hand. But the implanted electrode did not target the specific bundle of fibers that maps to the thumb.

Studies published in the past two years, though, have shown that people using these bionic hands could control their grip better than they could when the only feedback came at the surface of the upper arm. People getting the direct nerve stimulation also reported feeling as if the hand was more a part of them.

As with the bionic hands, future haptic technology will likely need substantial refining to get things right. And virtual hugs and other simulated touch may never be as good as the real thing. But haptics may provide new ways to explore our world and stay in touch — both literally and virtually.