Teen-designed tech could expand access for people with disabilities

Regeneron ISEF competition showcased sign-language translators, ‘Mind Beacon’ and more

Millions of people around the world are affected by visual and hearing impairments. For many of them, access remains an issue. Teens at the 2022 Regeneron ISEF competition presented new technology to address such barriers.

Richard Hutchings/Corbis Documentary/Getty Images Plus

By Anna Gibbs

ATLANTA, Ga. — While procrastinating on homework, Seoyoung Jun closed one eye and successfully picked up her pencil holder. She realized that orienting herself in a 3-D space didn’t require both eyes. This surprised her. With research, she learned that the brain can process 3-D information without any vision at all. And with that, the idea for Mind Beacon was born.

Visually impaired people get their spatial information mainly from touch, not vision. And this sophomore at Thomas Jefferson High School for Science and Technology in Alexandria, Va., based her new navigation device on that idea. It can help blind people get a read on what obstacles lie ahead.

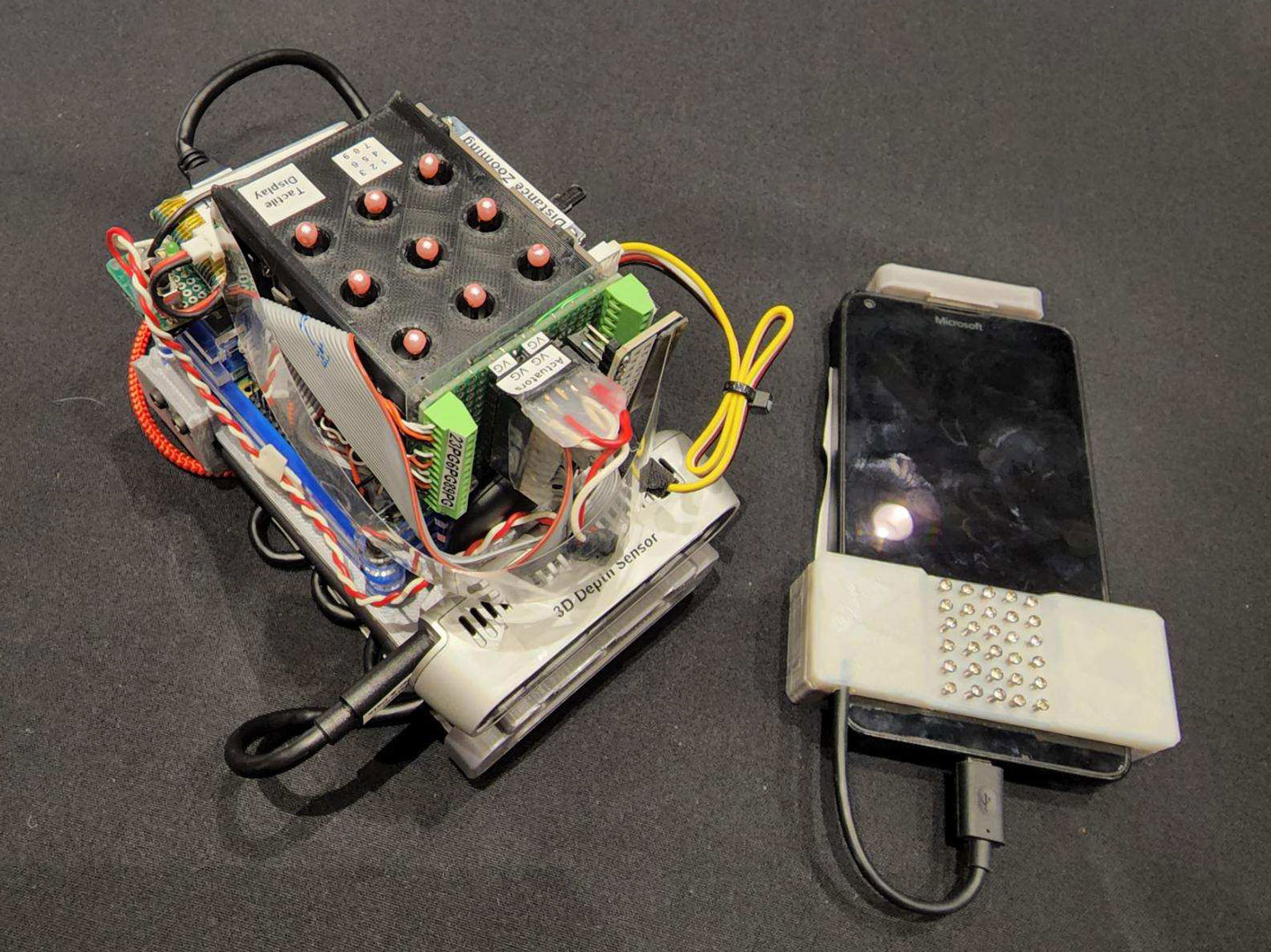

The portable device uses a beam of infrared light to gather 3-D information about the placement of walls, beams, furniture and other things. The bounced beam conveys that information back to the device, which then raises little pins to indicate where those obstacles are. People who are visually impaired can “read” the position of those pins to understand the layout of structures and objects ahead — and navigate around them.

When Seoyoung’s device worked for the first time, she didn’t believe it. She’d been up all night working on it. To be sure it wasn’t a mistake, she shut it off and rebooted. When it worked again, she recalls, “I just started screaming [with joy].” It had been seven long months of research, building and testing. Her excitement woke her parents, who asked her to please pipe down. So she jotted down some notes and then, finally, went to sleep.

This portable device to help visually impaired people create a mental image of their surroundings won the 16-year-old a spot at the world’s premier high-school research competition. Last week, she joined some 1,750 other finalists at the 2022 Regeneron International Science and Engineering Fair. Roughly 600 of those teens shared nearly $8 million in prizes.

A number of those finalists wowed judges and members of the public with new tech to assist people with sensory disabilities.

A map that works like braille

The heart of Mind Beacon is a 3-D depth sensor that’s hooked up to a mini computer. Seoyoung coded that computer to control nine motors that are lined up in three rows of three. Each motor controls a pin that can move up and down. The height of each pin conveys data on 2.25 cubic feet (0.06 cubic meter) of space in front of the sensor. On the computer screen, those nine blocks of space represent “volume boxes.” Together, they look something like a 3-D strike zone in baseball.

The idea for the display was inspired by her brother playing Minecraft, Seoyoung explains. That computer game uses “multiple cubes put together,” she says. She realized that would be a good way to depict spatial data in real life.

When the sensor detects an obstacle in a volume box, the corresponding pin rises. It can rise to three different heights. Each height roughly denotes the height of the obstacle. Someone who runs their hand over the nine pins can tell where the obstacles are and their general height. To increase the display area even more, Seoyoung added a zoom lever. It relays data from volume boxes that are even farther away.
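To make the pin logic concrete, here is a minimal Python sketch of how depth readings might be turned into a three-by-three grid of pin levels. It is not Seoyoung’s code. The top-down layout (rows by distance, columns from left to right), the box footprint and the height thresholds are all assumptions chosen for illustration, and `pin_levels` is a hypothetical name.

```python
from typing import Iterable, List, Tuple

# One depth reading: (x = left/right, y = height, z = distance ahead), in feet.
Point = Tuple[float, float, float]

# Assumed values for illustration only; the article does not give them.
BOX_WIDTH = 1.5                  # left-to-right size of one volume box
BOX_DEPTH = 1.5                  # front-to-back size of one volume box
HEIGHT_STEPS = (0.5, 1.5, 3.0)   # obstacle heights that trigger pin levels 1, 2, 3


def pin_levels(points: Iterable[Point]) -> List[List[int]]:
    """Return a 3x3 grid of pin levels (0 = down, 3 = fully raised).

    Row 0 is the nearest row of boxes; column 0 is the leftmost column.
    """
    grid = [[0] * 3 for _ in range(3)]
    for x, y, z in points:
        # Shift x so the three columns are centered on the sensor.
        col = int((x + 1.5 * BOX_WIDTH) // BOX_WIDTH)
        row = int(z // BOX_DEPTH)
        if not (0 <= col < 3 and 0 <= row < 3):
            continue  # reading falls outside the nine volume boxes
        # Count how many height thresholds this reading reaches.
        level = sum(y >= step for step in HEIGHT_STEPS)
        # Each pin reports the tallest obstacle seen in its box.
        grid[row][col] = max(grid[row][col], level)
    return grid


readings = [(-2.0, 1.0, 0.5), (0.0, 4.0, 2.0), (1.0, 0.2, 4.0), (5.0, 3.0, 1.0)]
print(pin_levels(readings))  # [[1, 0, 0], [0, 3, 0], [0, 0, 0]]
```

In this sketch, a low reading near the floor leaves its pin down, and a reading far off to the side is ignored because it lies outside the nine boxes.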

She imagines a future version that could hook up to a smartphone, with more little pins that can mimic the exact height of obstacles. It would feel like a little map depicting the location and size of obstacles ahead.

Seoyoung’s work took second place in the fair’s Robotics and Intelligent Machines category. That award came with a $2,000 prize.

AI for ASL

As a coder, Nand Vinchhi wanted to invent something that didn’t require a device. While brainstorming with friends at a hackathon, the group came up with the idea for a platform to translate sign language. In the end, the team decided not to develop it. But Nand couldn’t stop thinking about the translator.

“I’ve always been super interested in a better education system for everyone, especially in my country,” where there’s a lot of inequality, he says. This 17-year-old attends the National Public School, Koramangala in Bangalore, India. “I wanted to do something through technology that would help.”

In just a few months, he developed the artificial-intelligence technology needed to translate American Sign Language (ASL) in real time. It’s basically a speech-to-text app for sign language. Here, hand movements are the speech.

The hardest part, Nand says, was figuring out how to approach the problem. At times, he says, “I didn’t know what to do.” When that happened, he would go to sleep. “In the morning, I tried again.”

For Nand, “sleeping on it” paid off. He decided to pinpoint body parts important in signing. This might be individual fingertips, for instance. Using a machine-learning program, he then taught a computer to identify those key points in videos he took. The computer would compile data from many frames of the video. This created a dataset.

Nand gathered lots of these datasets and then used an algorithm to look for differences between them. That allowed him to compare a sign to any of some 100 ASL words in that dataset.
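The article does not say which algorithm Nand used to compare signs. As one plausible illustration, the Python sketch below uses a simple nearest-match approach: it resamples each clip of tracked key points to a fixed number of frames, then picks the reference word whose key points sit closest on average. The function names, the two-dimensional key points and the tiny two-word reference set are all hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Frame = Sequence[Tuple[float, float]]  # one (x, y) position per tracked key point
Clip = Sequence[Frame]                 # the frames of one signed word


def resample(clip: Clip, n: int) -> List[Frame]:
    """Pick n evenly spaced frames so clips of different lengths can be compared."""
    last = len(clip) - 1
    return [clip[round(i * last / (n - 1))] for i in range(n)]


def clip_distance(a: Clip, b: Clip, n: int = 8) -> float:
    """Average distance between matching key points across the resampled frames."""
    total, count = 0.0, 0
    for frame_a, frame_b in zip(resample(a, n), resample(b, n)):
        for point_a, point_b in zip(frame_a, frame_b):
            total += math.dist(point_a, point_b)
            count += 1
    return total / count


def classify(clip: Clip, references: Dict[str, Clip]) -> str:
    """Return the reference word whose clip is closest to the new one."""
    return min(references, key=lambda word: clip_distance(clip, references[word]))


# Made-up reference clips: a single fingertip moving right or moving up.
references = {
    "hello": [[(0.0, 0.0)], [(1.0, 0.0)], [(2.0, 0.0)]],
    "yes": [[(0.0, 0.0)], [(0.0, 1.0)], [(0.0, 2.0)]],
}
new_clip = [[(0.0, 0.1)], [(0.5, 0.1)], [(1.0, 0.1)], [(1.5, 0.1)], [(2.0, 0.1)]]
print(classify(new_clip, references))  # hello
```

A real system would track many more key points per frame and would likely need a smarter way to line up signs performed at different speeds. Still, the basic idea is the same: measure how different a new sign is from each known one.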

His system now translates signs with 90.4 percent accuracy, he reported at the competition. The delay from signing to translation is only about three-tenths of a second. That’s only a tenth of a second longer than the typical speech delay in video calls. The teen now plans to boost both the accuracy and the number of words available in the dataset.

Nand imagines the technology could be integrated someday into existing communication platforms, such as Zoom, or into online platforms such as Google Translate. That could help ASL speakers communicate with those who don’t know the language. Another promising application, he says, is as an education tool, similar to Duolingo, for people who want to learn ASL.

Shoes and gloves

Sisters Shanttale and Sharlotte Aquino Lopez worked on a related communications problem. But these engineers from the San Juan Math, Science and Technology Center in San Juan, Puerto Rico, took a totally different approach. Shanttale, 18, and Sharlotte, 16, wanted to help break the barrier between those who use ASL and those who don’t know it. The glove they developed can help anyone learn basic ASL.

Sensors they put on the fingers of the glove relay motion data that reflect the shape of the glove. The glove then conveys those signals to a circuit board, which translates the input into a letter on a screen. The idea is that the glove can help users visualize the signs they’re learning. In time, users should be able to understand ASL as signed by others and “speak” it themselves.
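The article doesn’t describe how the sisters’ circuit board turns sensor signals into letters. One simple way such a translation could work is a lookup table, sketched below in Python. The sketch assumes one bend reading per finger, from 0 (straight) to 1 (fully bent), and covers only a few fingerspelled letters whose handshapes are easy to tell apart by which fingers are extended. The threshold, the table and the name `letter_for` are hypothetical.

```python
from typing import Dict, Optional, Sequence, Tuple

BEND_THRESHOLD = 0.5  # assumed cutoff: readings below this count as "extended"

# Finger order: (thumb, index, middle, ring, pinky); True means extended.
# A deliberately tiny, simplified table, not a full fingerspelling alphabet.
HANDSHAPES: Dict[Tuple[bool, ...], str] = {
    (False, True, True, True, True): "B",
    (False, False, False, False, True): "I",
    (True, True, False, False, False): "L",
    (True, False, False, False, True): "Y",
}


def letter_for(readings: Sequence[float]) -> Optional[str]:
    """Map five bend readings to a letter, or None if the shape is not in the table."""
    if len(readings) != 5:
        raise ValueError("expected one reading per finger")
    shape = tuple(reading < BEND_THRESHOLD for reading in readings)
    return HANDSHAPES.get(shape)


print(letter_for([0.1, 0.2, 0.9, 0.9, 0.9]))  # L
print(letter_for([0.8, 0.9, 0.9, 0.9, 0.1]))  # I
```

Many real ASL letters differ in ways that a straight-or-bent reading can’t capture, such as hand orientation or movement, so a working glove would need richer sensor data than this sketch uses.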

Fifteen-year-old Joud Eldeeb built a shoe for visually impaired people. This young engineer attends Haitham Samy Hamad in Damanhur, Egypt. She embedded sensors in the footwear. When they detect an upcoming obstacle, the shoe vibrates, dings and sends a text to alert the wearer.

What these projects all have in common is a creative new use of computer technology to make the world more accessible to people with disabilities. Computer programming is the future, says Joud. “I want to learn it to help the world.”