Moral dilemma could limit appeal of driverless cars

In emergencies, should a car save passengers or pedestrians?

A car with self-driving technology sits at NASA’s Ames Research Center. Driverless cars will need to be programmed to handle emergency situations, but surveys find people have conflicting opinions on whether automated vehicles should protect pedestrians or passengers.

NASA/Ames/Dominic Hart

By Bruce Bower

Self-driving cars are just around the corner. Such vehicles will make getting from one place to another safer and less stressful. They also could cut down on traffic, reduce pollution and limit accidents. But how should driverless cars handle emergencies? People disagree on the answer. And that might put the brakes on this technology, a new study concludes.

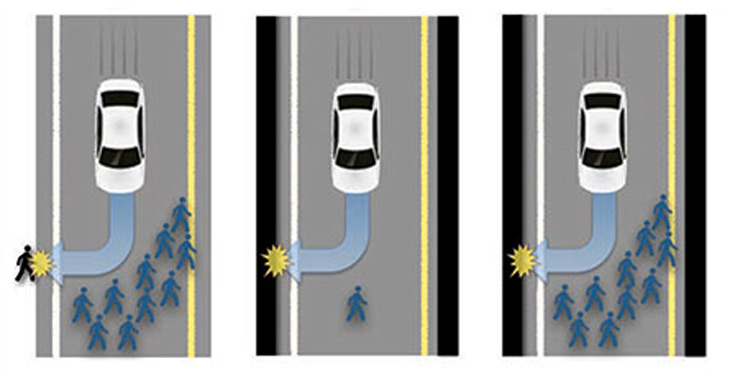

To understand the challenge, imagine a car that suddenly meets some pedestrians in the road. Even with braking, it’s too late to avoid a collision. So the car’s artificial intelligence must decide whether to swerve. To save the pedestrians, should the car veer off the road or swerve into oncoming traffic? What if such options would likely kill the car’s driver?

Researchers used online surveys to study people’s attitudes about such situations with driverless cars. They ran six surveys between June and November 2015. A total of 1,928 Americans shared their views.

Survey participants mostly agreed that automated cars should be designed to protect the most people. That included swerving into walls (or otherwise sacrificing their passengers) to save a larger number of pedestrians. But there’s a hitch. Those same surveyed people want to ride in cars that protect passengers at all costs, even if pedestrians would die as a result.

Jean-François Bonnefon, a psychologist at the Toulouse School of Economics in France, and his colleagues reported their findings June 24 in Science.

“Autonomous cars can revolutionize transportation,” says study coauthor Iyad Rahwan. He’s a cognitive scientist at the University of California, Irvine and at the Massachusetts Institute of Technology in Cambridge. But, he adds, this new technology creates a moral dilemma that could slow its acceptance.

Makers of computerized cars are in a tough spot, Bonnefon’s group warns. Most buyers would want their car to be programmed to protect them in preference to other people. However, regulations might one day require that cars act for the greater good. That would mean saving the most people. But the scientists think rules like this could backfire, driving away buyers. If so, all the potential benefits of driverless cars would be lost.

Auto motives

Automated cars will need to respond to emergencies without being sure how their decisions will play out. For example, should a car avoid a motorcycle by swerving into a wall? After all, the car’s passenger is more likely to survive a crash than is the motorcyclist.

“Before we can put our values into machines, we have to figure out how to make our values clear and consistent,” says Joshua Greene. This Harvard University philosopher and cognitive scientist wrote a commentary in the same issue of Science.

People have always faced moral dilemmas. Sometimes they’re unavoidable, says Kurt Gray. He’s a psychologist at the University of North Carolina in Chapel Hill. People may have opposite beliefs depending on the situation. For example, they may believe in saving others — until it means risking their own lives.

Compromises might be possible, Gray says. He thinks that even if all driverless cars are programmed to protect their passengers in emergencies, traffic accidents will decline. Those vehicles might be dangerous to pedestrians on rare occasions. But they “won’t speed, won’t drive drunk and won’t text while driving, which would be a win for society.”

Moral math

In the surveys, most people didn’t think cars should sacrifice a passenger to save just one pedestrian. But they changed their minds depending on the number of pedestrians’ lives that could be saved. For instance, about three out of every four volunteers in one survey said it was better for a car to sacrifice one passenger than to kill 10 pedestrians. That trend held even when volunteers imagined they were in a driverless car with their family members. Bonnefon does not think those participants were just trying to impress researchers with “noble” answers.

But people’s opinions changed when they imagined actually buying a driverless car. In another survey, people again said pedestrian-protecting cars were more moral. But most of those same people admitted that they wanted their own car to be programmed to protect its passengers. Other people thought driverless cars that swerved to avoid pedestrians would be good for others to drive, but they wouldn’t buy one themselves.

What about a law forcing either human drivers or automated cars to swerve to avoid pedestrians? People who took the survey were lukewarm about this idea. Even if a passenger-sacrificing car could save the lives of 10 pedestrians, participants didn’t feel strongly that this should be a law.

A final survey had people imagine scenarios in which they were riding alone, with a family member or with their child. Afterward, they were much less eager to buy an automated car that would save pedestrians. Protecting themselves and their families, it seemed, was the most powerful driver.